C2S-Scale preprint released!

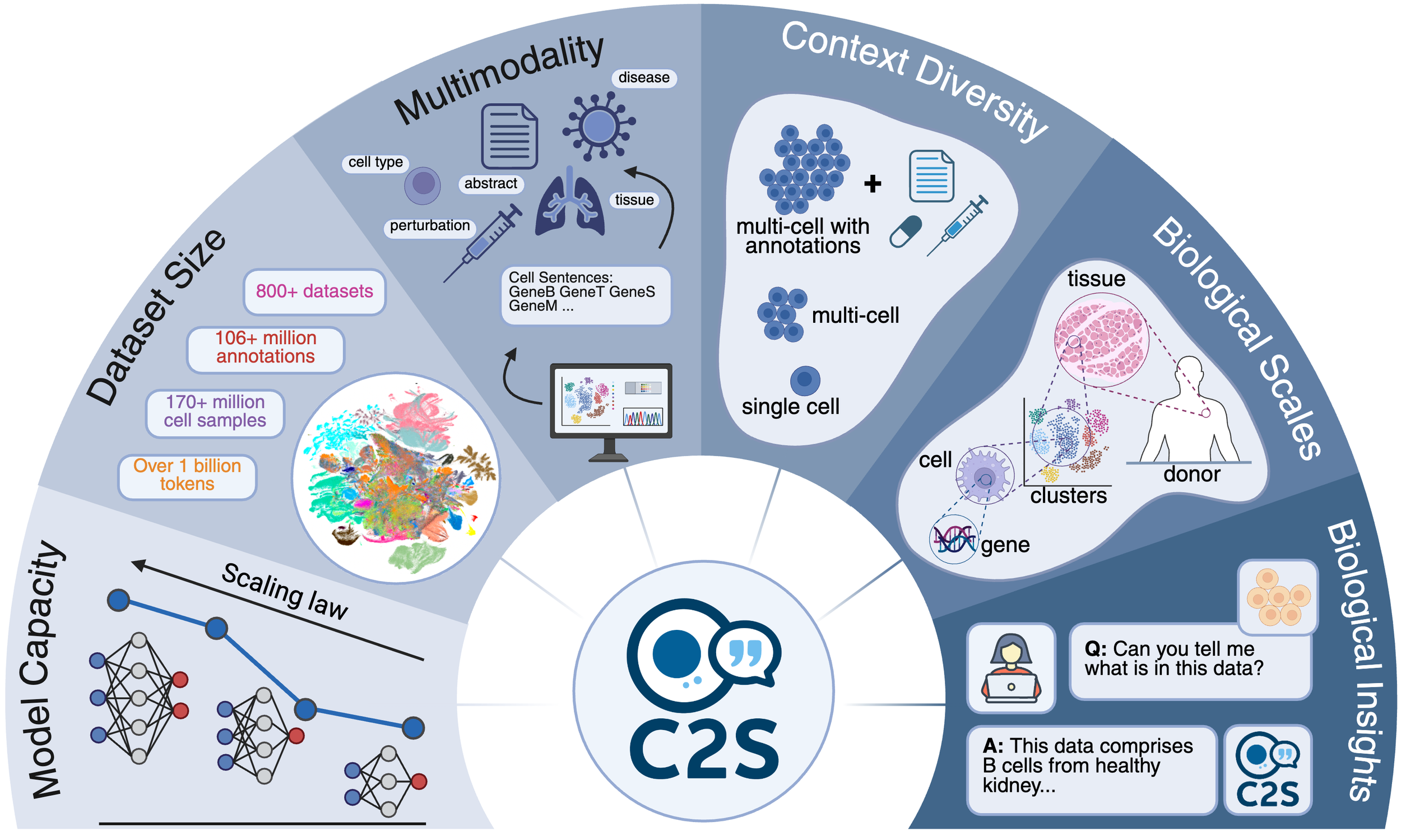

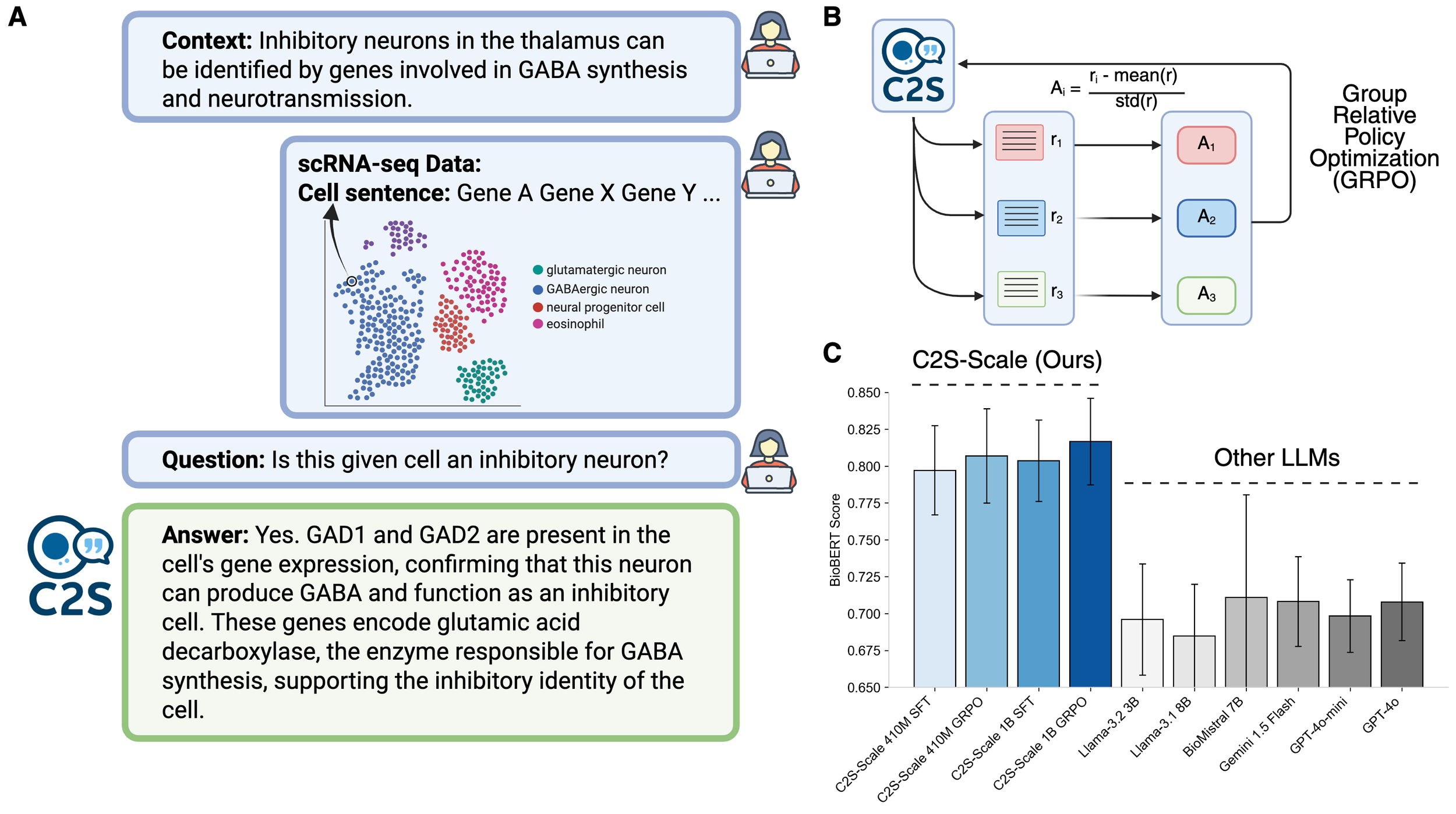

C2S-Scale is our newest collaboration with Google Research and Google DeepMind. By pre-training language models of up to 27 billion parameters on more than one billion tokens of “cell sentences,” biomedical text, and rich metadata, then fine-tuning them with modern reinforcement-learning techniques, we achieve state-of-the-art predictive and generative performance on complex multicellular tasks. C2S-Scale moves beyond cell-level snapshots toward “virtual cells”: in-silico avatars that let researchers run thousands of hypothetical experiments, accelerate therapeutic target discovery, and simulate patient-specific responses long before any wet-lab assay is run.

[Read the preprint →]

Author List

Syed Asad Rizvi*,†,1, Daniel Levine*,1, Aakash Patel*,1, Shiyang Zhang*,1, Eric Wang*,2, Sizhuang He, David Zhang, Cerise Tang, Zhuoyang Lyu, Rayyan Darji, Chang Li, Emily Sun, David Jeong, Lawrence Zhao, Jennifer Kwan, David Braun, Brian Hafler, Jeffrey Ishizuka, Rahul M. Dhodapkar, Hattie Chung, Shekoofeh Azizi2, Bryan Perozzi3, David van Dijk1,‡

*Equal contribution

†Work partially done during internship at Google Research

‡Corresponding author

1Yale University, 2Google DeepMind, 3Google Research